TL;DR Using WireGuard with VRFs under systemd-networkd.

The concept

When you send all of your traffic through VPNs, you usually end up with a “private” network part (inside the VPN) and a public one (the direct, untrusted internet), and the two need to be kept separate.

From a practical perspective, you might want a Linux-based router with an “inner” default network, transported by WireGuard tunnels, and an external network for the internet, over which the VPN data is transported. The public routing domain shall be routed completely separately from the private routing domain, so that no packets can leak between them. No Layer 2 mechanism is used: packets in both domains are isolated and routed differently.

In practice, I use this setup to transport my IPv6-only experimental network over the internet to machines that have no BGP uplink.

VRFs

Different routing domains are usually realized with VRFs (Virtual Routing and Forwarding). Interfaces (both physical and virtual like VLAN or Tunnel interfaces) can be assigned to a VRF.

You can think of VRFs as the “VLANs of Layer 3” (routing instead of the Ethernet layer).

On Linux, a VRF is a network device with an associated separate routing table. To move an interface into a VRF, set the master to the VRF device.

In the following example, eth1 is in the default VRF, while eth0 is in the WAN VRF:

~# ip a

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master vrf-wan state UP group default qlen 1000

[...]

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

[...]

4: vrf-wan: <NOARP,MASTER,UP,LOWER_UP> mtu 65575 qdisc noqueue state UP group default qlen 1000

[...]
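
For reference, the state shown above could also be created by hand with iproute2. The following is a minimal sketch, not part of the original setup: the helper name vrf_commands is hypothetical, and table 1000 is simply the table number used later in this post. It only builds and prints the commands, since running them requires root.

```python
import shlex


def vrf_commands(vrf: str, table: int, members: list[str]) -> list[list[str]]:
    """Build the iproute2 commands that create a VRF and enslave interfaces to it."""
    cmds = [
        # A VRF is a network device of type "vrf" tied to a routing table.
        ["ip", "link", "add", vrf, "type", "vrf", "table", str(table)],
        ["ip", "link", "set", "dev", vrf, "up"],
    ]
    for ifname in members:
        # Setting the master moves the interface into the VRF.
        cmds.append(["ip", "link", "set", "dev", ifname, "master", vrf])
    return cmds


# Print the commands instead of executing them (they need root to run).
for cmd in vrf_commands("vrf-wan", 1000, ["eth0"]):
    print(shlex.join(cmd))
```

Changes made this way are not persistent; the systemd-networkd files later in this post achieve the same result across reboots.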

By default, connections (both server and client side), e.g. to your SSH server, happen only in the default VRF. For listening sockets, this behavior can be changed via sysctl, e.g. net.ipv4.tcp_l3mdev_accept=1 and net.ipv4.udp_l3mdev_accept=1, which I recommend for this use case.

For more details, take a look at the kernel documentation.
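
To check what a machine is currently set to, the two sysctls can be read from /proc/sys. This is a small sketch under the assumption that the kernel exposes them there; the helper names sysctl_path and read_sysctl are made up for this example.

```python
from pathlib import Path

L3MDEV_SYSCTLS = ("net.ipv4.tcp_l3mdev_accept", "net.ipv4.udp_l3mdev_accept")


def sysctl_path(name: str) -> Path:
    """Map a dotted sysctl name to its file below /proc/sys."""
    return Path("/proc/sys") / name.replace(".", "/")


def read_sysctl(name: str):
    """Return the current value as a string, or None if it cannot be read."""
    try:
        return sysctl_path(name).read_text().strip()
    except OSError:
        return None


for name in L3MDEV_SYSCTLS:
    # "1" lets listening sockets that are not bound to a device
    # accept traffic arriving in any VRF.
    print(f"{name} = {read_sysctl(name)}")
```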

Software can move its sockets to a specific device (and thus into a VRF) by using the SO_BINDTODEVICE socket option.
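
As an illustration of what that looks like from an application, here is a minimal Python sketch. The helper names device_option and bind_to_device are hypothetical; the socket option itself is the standard Linux one. Binding usually needs elevated privileges (root or CAP_NET_RAW), and the device has to exist.

```python
import socket

IFNAMSIZ = 16  # Linux limit for interface names, including the trailing NUL byte


def device_option(ifname: str) -> bytes:
    """Encode an interface name as the value expected by SO_BINDTODEVICE."""
    raw = ifname.encode("ascii")
    if not raw or len(raw) >= IFNAMSIZ:
        raise ValueError(f"invalid interface name: {ifname!r}")
    return raw + b"\0"


def bind_to_device(sock: socket.socket, ifname: str) -> None:
    """Bind a socket to a device, e.g. a VRF device such as vrf-wan (Linux only)."""
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_BINDTODEVICE, device_option(ifname))


# Example use (not executed here, since it needs privileges and an existing device):
#   sock = socket.socket(socket.AF_INET6, socket.SOCK_STREAM)
#   bind_to_device(sock, "vrf-wan")
#   sock.connect(("2001:db8::1", 443))
```

Once bound to the VRF device, the socket's route lookups use the routing table of that VRF.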

VRFs with WireGuard

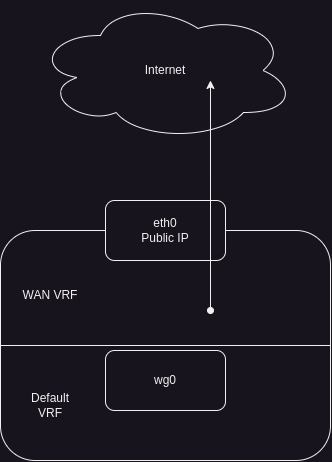

In our example, we have the internal default VRF, and a WAN VRF containing the public Interface & IP.

The traffic transported through a WireGuard tunnel tied to the wg0-Interface shall appear in the default VRF.

Because it is the default VRF, we don’t need to move wg0 into another VRF.

The encrypted tunnel however shall be transported over the internet (in our case eth0), which makes it necessary to somehow move the WireGuard traffic to the “outer”, WAN VRF.

All of this is shown in the drawing below.

Interface & VRF Architecture

Implementation

How can this be implemented in practice? In this post, we will focus on systemd-networkd as the network management daemon, but you can of course use other tools like ifupdown.

Problem

WireGuard does not (yet) support binding its socket (where the encrypted tunnel traffic appears) to a certain device, meaning it is also not possible to terminate this socket directly in a VRF.

Technically, this is possible (I did a proof of concept a few years ago), but it was never upstreamed. It requires changes to the kernel, the control plane and the netlink protocol, however.

WireGuard and VRFs

WireGuard upstream suggests using either network namespaces (netns) or IP rules with multiple routing tables to manage routing with the “inner/outer” concept.

Network namespaces address a somewhat different use case than VRFs: they provide much deeper isolation and are intended more for containers than for separate routing domains.

The technology behind IP rules is basically what we are using: we let WireGuard set a “fwmark” on its packets, and steer the marked packets into a VRF (or at least into the VRF's routing table).

So why not use IP rules directly/manually? You can of course do that, but in my opinion VRFs are a much more elegant solution from a design & administration perspective.

Configuration with systemd-networkd

For systemd-networkd, we need to create some network and netdev files.

You can also add an alias name for your numeric routing table in /etc/iproute2/rt_tables:

1000 transit

VRF

With the following files, we create the vrf-wan (attached to the routing table 1000):

/etc/systemd/network/vrf-wan.netdev:

[NetDev]

Name=vrf-wan

Kind=vrf

[VRF]

Table=1000

We also need a network file for the VRF, which brings the VRF device up and additionally (for WireGuard) redirects packets carrying the fwmark 1000 to the VRF's routing table by installing an IP rule.

/etc/systemd/network/vrf-wan.network:

[Match]

Name=vrf-wan

[RoutingPolicyRule]

FirewallMark=1000

Table=1000

Family=both

The routing policy rule results in the following IP rule:

32765: from all fwmark 0x3e8 lookup transit proto static

transit is the alias for the routing table 1000.
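
Note that ip prints the mark in hexadecimal: 0x3e8 is the decimal 1000 from FirewallMark. As a small sketch for checking this in a script, the following parses such a rule line; the function name parse_fwmark_rule is made up for this example, and the sample line is the one shown above.

```python
import re

# Matches the "fwmark <mark> lookup <table>" part of an `ip rule` line.
RULE_RE = re.compile(r"fwmark\s+(\S+)\s+lookup\s+(\S+)")


def parse_fwmark_rule(line: str):
    """Return (mark, table) for a rule line with a fwmark selector, else None."""
    match = RULE_RE.search(line)
    if match is None:
        return None
    mark_text = match.group(1).split("/")[0]  # drop an optional /mask suffix
    # Base 0 accepts both hexadecimal ("0x3e8") and decimal ("1000") notation.
    return int(mark_text, 0), match.group(2)


line = "32765: from all fwmark 0x3e8 lookup transit proto static"
print(parse_fwmark_rule(line))  # (1000, 'transit')
```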

WAN Interface

The following file configures your WAN interface and moves it into the VRF:

/etc/systemd/network/eth0.network:

[Match]

Name=eth0

[Network]

VRF=vrf-wan

# here comes your default static IP/DHCP/... config

WireGuard Tunnel

The netdev file creates the tunnel interface. FirewallMark is the fwmark set on the encrypted “outer” packets, which causes them to be moved into the VRF.

RouteTable=off prevents systemd-networkd from installing routes for the AllowedIPs, which is usually what you need if you run a separate routing daemon like bird.

/etc/systemd/network/wg0.netdev:

[NetDev]

Name=wg0

Kind=wireguard

[WireGuard]

PrivateKey=nice_try

RouteTable=off

FirewallMark=1000

[WireGuardPeer]

PublicKey=

AllowedIPs=::/0

PersistentKeepalive=1

Of course, the WireGuard tunnel needs an IP configuration, which is done in the network file:

/etc/systemd/network/wg0.network:

[Match]

Name=wg0

[Network]

# here comes your default IP config

Conclusion and Outlook

In summary, this solution allows a clean WireGuard configuration with VRFs and therefore separate routing domains.

The only downside is that you need the fwmark workaround (there is no real VRF configuration), which could be fixed by patching WireGuard to support VRFs natively.