TL;DR Deploy your own Forgejo runner in an IPv6-only Kubernetes cluster by patching the docker daemon.json

🚄 This post was supported by EC164 “Transalpin” and EC112 “Blauer Enzian”

Why Codeberg/Forgejo? What are the actions for?

Recently, I’ve started to migrate some of my git repositories from GitHub to Codeberg, which is a Forgejo instance, as I’m more comfortable with a community-driven Git & Development platform.

Forgejo offers lots of features known from similar platforms (like PRs, Issues, and Actions), but obviously with limited resources (and sometimes features) due to the nature of the relatively young ecosystem.

In the past, I’ve used GitHub Actions for various tasks:

- building and deploying this website

- general lint, test & deploy pipelines (e.g. for pkg-exporter, which is also in the process of being moved to Forgejo as I’m writing this)

- building container images

I’m also planning on introducing some new tools using this CI, hopefully making my life easier:

- Renovate Bot should help me to keep all the dependencies up to date (and runs quite often in a scheduled CI job)

- Copier, which should help me to template all the “boilerplate” for my Python projects, and keep them up to date using Renovate. We’ll see how this works though ;)

Self-Hosting Forgejo actions

As I expect quite a number of jobs to run, I’ve decided to use my own runner. Codeberg offers both Woodpecker CI and Forgejo Actions. Woodpecker CI is the “more recommended” but “external” choice, while Forgejo Actions is not production-grade yet, although it is “natively” integrated and somewhat compatible with GitHub Actions syntax. I decided to start with the latter.

Forgejo Actions has some “missing” features (like native Kubernetes compatibility), but more on that later.

Forgejo Runners

Forgejo Action Jobs are executed by Forgejo Runners, which run separately and execute each workflow.

Runners are registered to Forgejo using a token, e.g. on the account level. With that, runners are available to all repos in that account - for more details, take a look at the documentation.

Forgejo runners usually rely on LXC, docker or podman for isolation of the jobs, so one of these is required. The jobs could theoretically also run without isolation on the host, but that’s not recommended.

IPv6 Kubernetes

As I don’t want to build “manually crafted container landscapes” any longer, I’m maintaining a Kubernetes Cluster in my IPv6 only ecosystem, easily deploying all services I need using helm charts. At least sometimes, until it isn’t easy anymore. Like with this project.

IPv6-only means that the Kubernetes Pod has no Legacy IP address (also known as IPv4), only an IPv6 one. No 464XLAT in the container, just IPv6. Outside targets can be reached using DNS64/NAT64 (done outside the cluster), but there is no native Legacy IP connectivity. Usually, this works fine as long as applications try to use IPv6 - Legacy-IP-only destinations on the internet are handled by NAT64/DNS64 transparently to the application.
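To make the DNS64 part a bit more tangible, here is a minimal Python sketch of how a DNS64 resolver can synthesize an IPv6 address for a Legacy-IP-only destination. It assumes the RFC 6052 well-known prefix `64:ff9b::/96`; the prefix actually used in my network (or yours) may be a different, network-specific one, and the function name is made up for this example.

```python
import ipaddress

# Assumption: the NAT64 gateway uses the RFC 6052 well-known prefix.
# A real deployment may use a network-specific prefix instead.
NAT64_PREFIX = ipaddress.IPv6Network("64:ff9b::/96")


def synthesize_aaaa(ipv4: str,
                    prefix: ipaddress.IPv6Network = NAT64_PREFIX) -> ipaddress.IPv6Address:
    """Embed an IPv4 address in the low 32 bits of a /96 NAT64 prefix."""
    if prefix.prefixlen != 96:
        raise ValueError("this sketch only handles /96 prefixes")
    v4 = ipaddress.IPv4Address(ipv4)
    return ipaddress.IPv6Address(int(prefix.network_address) | int(v4))


if __name__ == "__main__":
    # 192.0.2.1 is a documentation address (TEST-NET-1), not a real host.
    print(synthesize_aaaa("192.0.2.1"))
```

The application only ever sees the synthesized IPv6 address and connects to it; the NAT64 gateway extracts the embedded IPv4 address and translates the traffic.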

Forgejo Runner on Kubernetes

Thankfully, there is a helm chart for Forgejo runners by wrenix, which does all the setup for us. Due to the current architecture of Forgejo runners, there is both the Forgejo runner container and a “docker in docker”-container in the same pod, as Kubernetes is not natively supported yet.

Each Job runs as a docker container in the “docker in docker” (also called “dind”) container, triggered by the Forgejo runner communicating with the dind-container.

In the following diagram, you can see the rough setup of the Forgejo runner, as well as the network connectivity.

flowchart TD

internetv6(["Internet IPv6/NAT64/DNS64"])

subgraph cluster["Kubernetes Cluster"]

pod

clusterv6(["2001:db8::/64"])

end

clusterv6 --> internetv6

subgraph pod["Pod"]

forgejo["Forgejo Runner Container"]

docker

podv6(["Pod IP 2001:db8::dead:beef/64"])

podv6 --> clusterv6

forgejo <-->|Docker Socket| docker

end

subgraph docker["Docker in Docker"]

job --> ipv4

job["Job Container"]

ipv4(["172.17.0.0/24"])

end

No connectivity

But there is one problem: The docker in docker container does the default docker networking, which means Legacy IP only.

Once I start the job, everything runs fine until the job tries to reach something on the internet. That’s usually the first step: Trying to check out the git repo.

As seen in the diagram above, the “inside docker network” with all the jobs is completely isolated from the “outer” IPv6-only network, which provides the needed internet connectivity.

Solution: IPv6 in Docker, patched through the helm chart

What to do about this? One solution is of course native IPv6 only connectivity for the docker network.

I quickly thought about a “CLAT/NAT46 Sidecar” in the pod too (enabling native Legacy IP connectivity), but this would be complex, if doable at all. Also, the jobs still wouldn’t talk modern IP.

The goal is clear: Enable IPv6 for the docker in docker, while configuring everything without needing to patch the helm chart too much.

Enable IPv6 in Docker

IPv6 in docker is unfortunately still not enabled by default, and needs to be configured e.g. in the docker daemon. The documentation has a few examples.

I ended up with the following configuration for the daemon.json, to have everything permanent:

{
  "ipv6": true,
  "ip6tables": true,
  "fixed-cidr-v6": "fd00:d0ca:1::/64",
  "default-address-pools": [
    { "base": "172.17.0.0/16", "size": 24 },
    { "base": "fd00:d0ca:2::/104", "size": 112 }
  ]
}

All the options are explained in the dockerd documentation.
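Since a syntax slip in daemon.json (a stray trailing comma, for example) is easy to make, a quick strict parse before deploying can save a restart loop. The following is a minimal sketch using Python's `json` module; the function name and the list of expected keys are my own assumptions for this post, and the check says nothing about whether dockerd actually accepts the option values.

```python
import json

# The settings from this section as a string, as they would appear in the file.
DAEMON_JSON = """
{
  "ipv6": true,
  "ip6tables": true,
  "fixed-cidr-v6": "fd00:d0ca:1::/64",
  "default-address-pools": [
    { "base": "172.17.0.0/16", "size": 24 },
    { "base": "fd00:d0ca:2::/104", "size": 112 }
  ]
}
"""

# Hypothetical list: the keys this post relies on, not an official schema.
EXPECTED_KEYS = ("ipv6", "ip6tables", "fixed-cidr-v6", "default-address-pools")


def check_daemon_json(text: str) -> dict:
    """Parse the config strictly and check that the expected keys exist."""
    # json.loads raises json.JSONDecodeError on syntax errors such as trailing commas.
    config = json.loads(text)
    for key in EXPECTED_KEYS:
        if key not in config:
            raise KeyError(f"missing expected key: {key}")
    return config


if __name__ == "__main__":
    config = check_daemon_json(DAEMON_JSON)
    print("daemon.json parses, keys:", sorted(config))
```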

Of course, ipv6 is activated.

Now we need to distribute IPs, in this case ULAs:

When the docker default network is used, containers get an IP inside fd00:d0ca:1::/64.

However, as the Forgejo runner can be configured to create its own docker network per job, the default-address-pools are relevant: Non-default networks are provided with IPs out of these pools (if they don’t have a specific configuration, which Forgejo doesn’t have).
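To get a feeling for what these pools provide, the arithmetic can be sketched with Python's `ipaddress` module. The snippet only does the subnet math for the values from the daemon.json; it makes no claim about the order in which Docker hands out networks, and the helper name is made up.

```python
import ipaddress

FIXED_CIDR_V6 = ipaddress.ip_network("fd00:d0ca:1::/64")
# (base, size) pairs as in "default-address-pools".
POOLS = [
    ("172.17.0.0/16", 24),
    ("fd00:d0ca:2::/104", 112),
]
ULA = ipaddress.ip_network("fc00::/7")  # unique local address range


def pool_summary(base: str, size: int):
    """Return (number of networks, first network) carved out of one pool."""
    net = ipaddress.ip_network(base)
    count = 2 ** (size - net.prefixlen)
    first = next(net.subnets(new_prefix=size))
    return count, first


if __name__ == "__main__":
    for base, size in POOLS:
        count, first = pool_summary(base, size)
        print(f"{base}: {count} networks of /{size}, first is {first}")
    v6_pool = ipaddress.ip_network(POOLS[1][0])
    print("both v6 ranges are ULA:",
          FIXED_CIDR_V6.subnet_of(ULA) and v6_pool.subnet_of(ULA))
    print("v6 ranges overlap:", FIXED_CIDR_V6.overlaps(v6_pool))
```

Both pools yield 256 networks each, which is plenty for per-job networks on a single runner, and the default network range and the pool do not overlap.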

Because I do not want to route public IPs into the Forgejo runner pod, I unfortunately needed to use NAT to connect the private v6 to the public pod IP. This is done using the ip6tables option, which lets docker handle all the firewall (including NAT) stuff for IPv6.

The result looks like this. You can see the masquerade rules for both the default docker network (fd00:d0ca:1::/64) and the Forgejo runner network (fd00:d0ca:2::/112):

(dind-container)/ # ip6tables -L -v -t nat

Chain PREROUTING (policy ACCEPT 3947 packets, 316K bytes)

pkts bytes target prot opt in out source destination

0 0 DOCKER all -- any any anywhere anywhere ADDRTYPE match dst-type LOCAL

Chain INPUT (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT 25236 packets, 2431K bytes)

pkts bytes target prot opt in out source destination

0 0 DOCKER all -- any any anywhere !localhost ADDRTYPE match dst-type LOCAL

Chain POSTROUTING (policy ACCEPT 25236 packets, 2431K bytes)

pkts bytes target prot opt in out source destination

0 0 MASQUERADE all -- any !br-fcd61e14eeb8 fd00:d0ca:2::/112 anywhere

0 0 MASQUERADE all -- any !docker0 fd00:d0ca:1::/64 anywhere

Chain DOCKER (2 references)

pkts bytes target prot opt in out source destination

0 0 RETURN all -- br-fcd61e14eeb8 any anywhere anywhere

0 0 RETURN all -- docker0 any anywhere anywhere

With this configuration, every job should now get an IPv6 address and reach the internet. Legacy IP is still there, but not routed anywhere.

flowchart TD

internetv6(["Internet IPv6/NAT64/DNS64"])

subgraph cluster["Kubernetes Cluster"]

pod

clusterv6(["2001:db8::/64"])

end

clusterv6 --> internetv6

subgraph pod["Pod"]

forgejo["Forgejo Runner Container"]

docker

podv6(["Pod IP 2001:db8::dead:beef/64"])

podv6 --> clusterv6

forgejo <-->|Docker Socket| docker

end

subgraph docker["Docker in Docker"]

job --> ip

job["Job Container"]

ip(["172.17.0.0/24 fd00:d0ca:2::1/112"])

end

ip -->|Docker ip6tables magic| podv6

As my Kubernetes cluster has working DNS64/NAT64, theoretically the whole internet is reachable now.

Persisting the daemon.json

After we’ve figured out the configuration, we need to bring up every dind container with the correct daemon.json.

The helm chart for Forgejo runners provides a way to do this: mounting the daemon.json into the dind container/runner pod.

First, we need to create a configmap with the daemon.json content:

apiVersion: v1
kind: ConfigMap
metadata:
  name: etc-docker
data:
  daemon.json: |
    {
      "ipv6": true,
      "ip6tables": true,
      "fixed-cidr-v6": "fd00:d0ca:1::/64",
      "default-address-pools": [
        { "base": "172.17.0.0/16", "size": 24 },
        { "base": "fd00:d0ca:2::/104", "size": 112 }
      ]
    }

This configmap can be mounted into the dind container using the helm chart values, which are prepared for additional mounts:

volumes:
  - configMap:
      defaultMode: 420
      name: etc-docker
    name: etc-docker
volumeMounts:
  - mountPath: /etc/docker
    name: etc-docker

With the chart rolled out, every pod is started with the /etc/docker/daemon.json file available.

I also documented this in the README of the helm chart.
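If you would rather not hand-edit JSON embedded in YAML, one option is to generate the ConfigMap from a Python dict, so the embedded daemon.json is valid by construction. This is only a sketch of that idea, not how I deployed it; the function name is hypothetical, and it relies on kubectl accepting manifests in JSON form.

```python
import json

# Daemon settings as a plain dict; json.dumps guarantees valid JSON output.
DAEMON_CONFIG = {
    "ipv6": True,
    "ip6tables": True,
    "fixed-cidr-v6": "fd00:d0ca:1::/64",
    "default-address-pools": [
        {"base": "172.17.0.0/16", "size": 24},
        {"base": "fd00:d0ca:2::/104", "size": 112},
    ],
}


def build_configmap(name: str, daemon: dict) -> dict:
    """Build a ConfigMap manifest with the daemon config as a daemon.json key."""
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name},
        "data": {"daemon.json": json.dumps(daemon, indent=2)},
    }


if __name__ == "__main__":
    # Prints a JSON manifest; the name matches the volume used in the helm values.
    print(json.dumps(build_configmap("etc-docker", DAEMON_CONFIG), indent=2))
```

The printed manifest can be piped into `kubectl apply -f -`, in the namespace where the runner is deployed.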

Of course, the Forgejo runner also needs ipv6 set to true in the helm values:

runner:
  config:
    token: (...)
    file:
      container:
        enable_ipv6: true

Unhappy eyeballs: No more Legacy IP

Now, everything is fine, one would think. The internet connectivity is available using IPv6 and DNS64/NAT64, Legacy IP can be ignored. All connections should be using “Happy Eyeballs”, preferring IPv6 while using “what actually works”.

But unfortunately, this is not the case. Some actions, for example upload-artifact, stick to Legacy IP if an IPv4 address is available (not trying IPv6 at all), and subsequently the action fails with a timeout.
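For comparison, this is roughly what a well-behaved client does with its list of candidate addresses: try IPv6 first and alternate between address families, so that a broken family does not block the connection. The sketch below is loosely modelled on the address sorting described in RFC 8305; real implementations also race the connection attempts with timers, which is omitted here, and the function name is made up.

```python
import socket
from itertools import zip_longest


def interleave_families(candidates):
    """Order (family, address) pairs IPv6-first, alternating address families.

    Simplified sketch: only the ordering step, no connection racing.
    """
    v6 = [c for c in candidates if c[0] == socket.AF_INET6]
    v4 = [c for c in candidates if c[0] == socket.AF_INET]
    ordered = []
    for six, four in zip_longest(v6, v4):
        if six is not None:
            ordered.append(six)
        if four is not None:
            ordered.append(four)
    return ordered


if __name__ == "__main__":
    # Made-up candidates using documentation addresses, as a resolver behind
    # DNS64 might return them for a Legacy-IP-only destination.
    candidates = [
        (socket.AF_INET, "192.0.2.10"),
        (socket.AF_INET6, "64:ff9b::c000:20a"),
        (socket.AF_INET, "192.0.2.11"),
    ]
    for family, address in interleave_families(candidates):
        print(family.name, address)
```

A client that only ever picks the first IPv4 candidate never reaches the synthesized IPv6 address, which matches the timeouts described above.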

What to do about this? It would be nice to fix happy eyeballs for all the actions, but that is not realistic.

Thankfully, it is now possible to disable Legacy IP for docker networks.

This currently needs the experimental flag for docker, and needs to be set as a default for new networks.

The com.docker.network.enable_ipv4 option seen below disables IPv4 for all newly created bridge networks, which is the kind of network Forgejo runner creates for each job.

In the end, the docker daemon.json looks like this:

{
  "ipv6": true,
  "experimental": true,
  "ip6tables": true,
  "fixed-cidr-v6": "fd00:d0ca:1::/64",
  "default-address-pools": [
    { "base": "172.17.0.0/16", "size": 24 },
    { "base": "fd00:d0ca:2::/104", "size": 112 }
  ],
  "default-network-opts": {
    "bridge": {
      "com.docker.network.enable_ipv4": "false"
    }
  }
}

flowchart TD

internetv6(["Internet IPv6/NAT64/DNS64"])

subgraph cluster["Kubernetes Cluster"]

pod

clusterv6(["2001:db8::/64"])

end

clusterv6 --> internetv6

subgraph pod["Pod"]

forgejo["Forgejo Runner Container"]

docker

podv6(["Pod IP 2001:db8::dead:beef/64"])

podv6 --> clusterv6

forgejo <-->|Docker Socket| docker

end

subgraph docker["Docker in Docker"]

job --> ip

job["Job Container"]

ip(["fd00:d0ca:2::1/112"])

end

ip -->|Docker ip6tables magic| podv6

As we can see: No more Legacy IP. Every application that can do IPv6 sockets (hopefully all, it’s 2025) should work.

Verifying the setup

But how to verify the setup?

I’ve built a simple workflow and placed it in the repo from where I’m deploying my helm charts:

name: test ipv6 buildenv

on:

push:

jobs:

build-document:

runs-on: docker

steps:

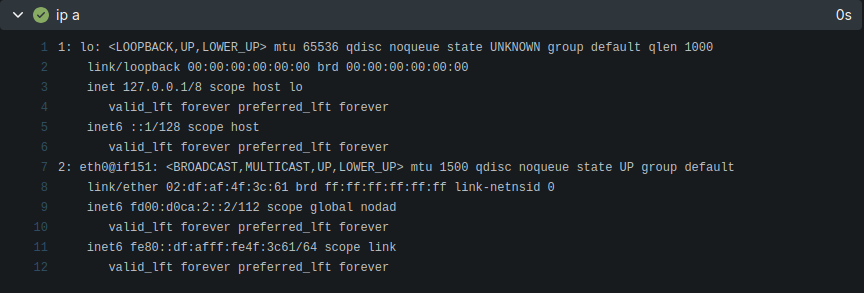

- run: apt-get update && apt-get install iproute2 -y

- run: ip a

After enabling actions, you should see something like this: ip a output showing only IPv6 inside the job container
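Instead of eyeballing the output, the check could also be automated: iproute2 can print JSON with `ip -j addr show`, which a small script can inspect for leftover Legacy IP addresses. The following Python sketch shows the idea. The sample data is made up for illustration (it is not captured from my runner), and the function name is hypothetical.

```python
import json

# Hypothetical sample in the shape of `ip -j addr show` output; values are made up.
SAMPLE = """
[
  {"ifname": "lo", "addr_info": [
    {"family": "inet", "local": "127.0.0.1", "prefixlen": 8, "scope": "host"},
    {"family": "inet6", "local": "::1", "prefixlen": 128, "scope": "host"}]},
  {"ifname": "eth0", "addr_info": [
    {"family": "inet6", "local": "fd00:d0ca:2::2", "prefixlen": 112, "scope": "global"},
    {"family": "inet6", "local": "fe80::42:acff:fe11:2", "prefixlen": 64, "scope": "link"}]}
]
"""


def non_loopback_addresses(ip_json: str, family: str) -> list:
    """Collect addresses of one family ("inet" or "inet6"), skipping loopback."""
    result = []
    for iface in json.loads(ip_json):
        if iface.get("ifname") == "lo":
            continue
        for addr in iface.get("addr_info", []):
            if addr.get("family") == family:
                result.append(addr["local"])
    return result


if __name__ == "__main__":
    legacy = non_loopback_addresses(SAMPLE, "inet")
    modern = non_loopback_addresses(SAMPLE, "inet6")
    print("Legacy IP addresses:", legacy)
    print("IPv6 addresses:", modern)
    # An IPv6-only job container should have no non-loopback IPv4 address.
    assert not legacy, "job container still has Legacy IP"
```

In a workflow step, the script would read the real output from stdin instead of the sample, provided Python is available in the job image.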

Conclusion and Outlook

Yes, you can run Forgejo runners inside Kubernetes with IPv6 only (and Dual stack), but it’s still a bit of work.

Overall, it works fine for me, but still feels a little bit hacky.

Of course, it would be nice if this became easier and enabled by default over time.

In particular, it would be nice if the runner moved away from “Docker in Docker”, which is a hacky solution for Kubernetes clusters. In the future, the Forgejo runner could act as some kind of controller, with every job natively getting its own Kubernetes pod, freely scheduled in the cluster. But this needs some development, mainly in the Forgejo runner, of course.

Until then, docker in docker/kubernetes patched for modern IP it is.